Description

Hadoop is a revolutionary open-source software framework that took data storage and processing to the next level. With its tremendous capability to store and process huge volumes of data on clusters of machines, it opened up opportunities for businesses around the world, including in artificial intelligence. It stores data and runs applications on clusters of commodity hardware, which greatly reduces the cost of installation and maintenance. It provides massive storage for any kind of data, enormous processing power, and support for many kinds of analytics, such as real-time and predictive analytics. The volume of data handled by organizations keeps growing exponentially with each passing day! This ever-demanding scenario calls for powerful big data solutions such as Hadoop for a truly data-driven approach to decision-making. Students who start as Hadoop developers can grow into Hadoop administrators by the end of a certification course, building toward a bright future in the process. Become a certified Hadoop professional and bag your dream job offer. Proper training in Hadoop technology helps professionals use Hadoop resources effectively and saves considerable time and effort.

Did you know?

Data and information technology are revolutionizing the world. More than 2.5 quintillion bytes of data are created daily across the globe. Surprisingly, the data generated in the last two years accounts for 90% of all the data in the world, and the rate of data creation keeps increasing every day! Big Data professionals play a vital role in this tremendous evolution, as they are responsible for handling such huge volumes of data!

Why learn and get Certified in Big Data and Hadoop?

1. Leading multinational companies are hiring for Hadoop technology – The Big Data & Hadoop market is expected to reach $99.31B by 2022, growing at a CAGR of 42.1% from 2015 (Forbes).

2. Growing Job Opportunities – McKinsey predicts that by 2018 there will be a shortage of 1.5M data experts (McKinsey Report).

3. Hadoop skills will boost salary packages – The average annual salary of Big Data Hadoop Developers is around $135k (Indeed.com Salary Data).

4. Future of Big Data and Hadoop looks bright – The world’s technological per-capita capacity to store information has roughly doubled every 40 months since the 1980s; as of 2012, 2.5 exabytes (2.5×10¹⁸ bytes) of data are generated every day.

“Technology professionals should be volunteering for Big Data projects, which make them more valuable to their current employer and more marketable to other employers” – (Dice.com)

Course Objective

After completing this course, trainees will have:

1. Expertise in writing custom Java MapReduce jobs that summarize data and solve common data manipulation problems

2. Knowledge of debugging, implementing workflows and common algorithms, and best practices for Hadoop development

3. The ability to create custom components, such as InputFormats and WritableComparables, to handle complex data types

4. The ability to understand advanced Hadoop API topics

5. Expertise in leveraging Hadoop ecosystem projects such as Hive, Oozie, Pig, Flume, and Sqoop
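
The MapReduce model behind the first objective can be illustrated without a cluster. The sketch below is a plain-Java, in-memory simulation of a word-count job (map, then shuffle/sort, then reduce). It does not use Hadoop's actual Mapper and Reducer classes, and the class and method names (WordCountSketch, map, shuffle, reduce, wordCount) are hypothetical, chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory simulation of the MapReduce model (no Hadoop dependency).
// All class and method names here are hypothetical, for illustration only.
public class WordCountSketch {

    // Map phase: emit (word, 1) for every word in one input line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.toLowerCase().split("\\W+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Shuffle/sort phase: group emitted values by key. TreeMap keeps keys sorted,
    // mirroring how a reducer receives its keys in sorted order.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum all counts emitted for one word.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Drives the three phases over every input line.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> emitted = new ArrayList<>();
        for (String line : lines) {
            emitted.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(emitted).entrySet()) {
            result.put(group.getKey(), reduce(group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> input = List.of("Hadoop stores data", "Hadoop processes data");
        System.out.println(wordCount(input)); // {data=2, hadoop=2, processes=1, stores=1}
    }
}
```

In a real Hadoop job the same three phases run distributed across the cluster, and the framework, not your code, performs the shuffle and sort between map and reduce.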

Prerequisites

There are no formal prerequisites for learning Hadoop. Knowledge of Core Java and SQL will help, but it is not required. If you want to learn Core Java, ZaranTech offers a complimentary self-paced “Core Java” course when you enroll in our Big Data Hadoop Certification course.

Prepare for Certification

Our training and certification program gives you a solid understanding of the key topics covered on the certification exams. In addition to boosting your income potential, becoming a certified professional lets you demonstrate your ability and expertise in the relevant domain. Upon completing the course, students are encouraged to prepare and register for the Cloudera Certified Developer for Apache Hadoop (CCDH) exam. Once students successfully clear the online exam, they are eligible for the CCA and CCP certifications from Cloudera. The Developer program includes two certification tiers: Cloudera Certified Associate (CCA175) and Cloudera Certified Professional (CCP-DE575).

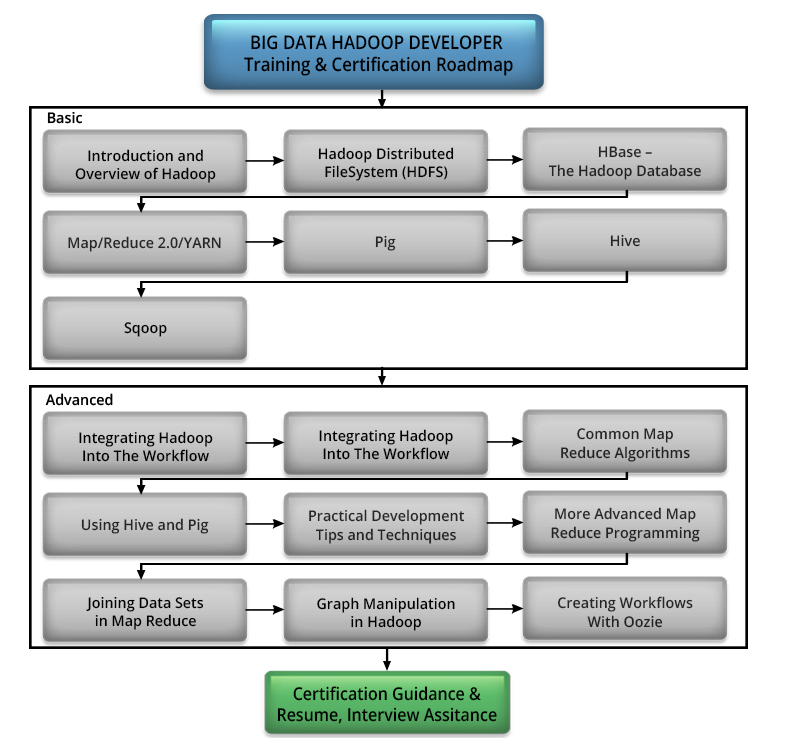

Basic

Unit 1: Introduction and Overview of Hadoop

- What is Hadoop?

- History of Hadoop

- Building Blocks - Hadoop Ecosystem

- Who is behind Hadoop?

- What is Hadoop good for, and what is it not good for?

Unit 2: Hadoop Distributed File System (HDFS)

- HDFS Overview and Architecture

- HDFS Installation

- HDFS Use Cases

- Hadoop File System Shell

- File System Java API

- Hadoop Configuration

Unit 3: HBase – The Hadoop Database

- HBase Overview and Architecture

- HBase Installation

- HBase Shell

- Java Client API

- Java Administrative API

- Filters

- Scan Caching and Batching

- Key Design

- Table Design

Unit 4: MapReduce 2.0/YARN

- Decomposing Problems into MapReduce Workflow

- Using JobControl

- Oozie Introduction and Architecture

- Oozie Installation

- Developing, Deploying, and Executing Oozie Workflows

Unit 5: Hive

- Hive Overview

- Installation

- HiveQL

Advanced

Unit 1: Integrating Hadoop Into The Workflow

- Relational Database Management Systems

- Storage Systems

- Importing Data from RDBMSs With Sqoop

- Hands-On Exercise

- Importing Real-Time Data with Flume

- Accessing HDFS Using FuseDFS and Hoop

Unit 2: Delving Deeper Into The Hadoop API

- More about ToolRunner

- Testing with MRUnit

- Reducing Intermediate Data With Combiners

- The configure and close Methods for MapReduce Setup and Teardown

- Writing Partitioners for Better Load Balancing

- Hands-On Exercise

- Directly Accessing HDFS

- Using the Distributed Cache
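
Writing a partitioner comes down to one decision: which reducer receives each map-output key. As an illustrative sketch, the plain-Java method below reproduces the arithmetic of Hadoop's default HashPartitioner (clear the sign bit of hashCode(), then take the remainder modulo the number of reducers). The class and helper names are hypothetical; a real job would instead subclass org.apache.hadoop.mapreduce.Partitioner and override getPartition.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of partitioning (no Hadoop dependency).
// Class and method names are hypothetical.
public class PartitionerSketch {

    // Same arithmetic as Hadoop's default HashPartitioner: masking with
    // Integer.MAX_VALUE clears the sign bit, so the result is in [0, numReducers).
    static int hashPartition(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Groups keys by the reducer they would be routed to. Every occurrence of
    // a key lands on the same reducer, which is what makes per-key reduction work.
    static Map<Integer, List<String>> assign(List<String> keys, int numReducers) {
        Map<Integer, List<String>> byReducer = new TreeMap<>();
        for (String key : keys) {
            byReducer.computeIfAbsent(hashPartition(key, numReducers), r -> new ArrayList<>())
                     .add(key);
        }
        return byReducer;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("hive", "pig", "sqoop", "flume", "oozie", "hive");
        System.out.println(assign(keys, 3));
    }
}
```

Because hashing ignores how often each key occurs, a skewed key distribution (for example, one very frequent key) still sends all of that key's records to a single reducer; this is the load-balancing problem that custom partitioners are written to address.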

Unit 3: Common MapReduce Algorithms

- Sorting and Searching

- Indexing

- Machine Learning With Mahout

- Term Frequency – Inverse Document Frequency

- Word Co-Occurrence
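
For reference, one common formulation of the TF-IDF weight listed in this unit is shown below; other variants normalize the term frequency or smooth the logarithm differently. Here t is a term, d is a document, N is the number of documents in the collection, tf(t, d) is the number of times t occurs in d, and df(t) is the number of documents that contain t.

```latex
\mathrm{tfidf}(t, d) = \mathrm{tf}(t, d) \cdot \log \frac{N}{\mathrm{df}(t)}
```

A term that appears in every document gets a weight of zero (since log 1 = 0), while terms concentrated in a few documents score higher. In a MapReduce setting, one typical (but not the only) approach computes tf, df, and N in chained jobs before a final job combines them.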

Unit 4: Using Hive and Pig

- Hive Basics

- Pig Basics

Unit 5: Practical Development Tips and Techniques

- Debugging MapReduce Code

- Using LocalJobRunner Mode For Easier Debugging

- Retrieving Job Information with Counters

- Logging

- Splittable File Formats

- Determining the Optimal Number of Reducers

- Map-Only MapReduce Jobs

Unit 6: More Advanced MapReduce Programming

- Custom Writables and WritableComparables

- Saving Binary Data Using SequenceFiles and Avro Files

- Creating InputFormats and OutputFormats

Unit 8: Graph Manipulation in Hadoop

- Introduction to graph techniques

- Representing graphs in Hadoop

- Implementing a sample algorithm: Single Source Shortest Path

Unit 9: Creating Workflows With Oozie

- The Motivation for Oozie

- Oozie’s Workflow Definition Format